As more organizations unlock the power of AI, the right infrastructure is key to turning innovation into real results.

As AI adoption accelerates, many organizations discover that their infrastructure simply can’t support the scale, speed, or visibility that AI demands.

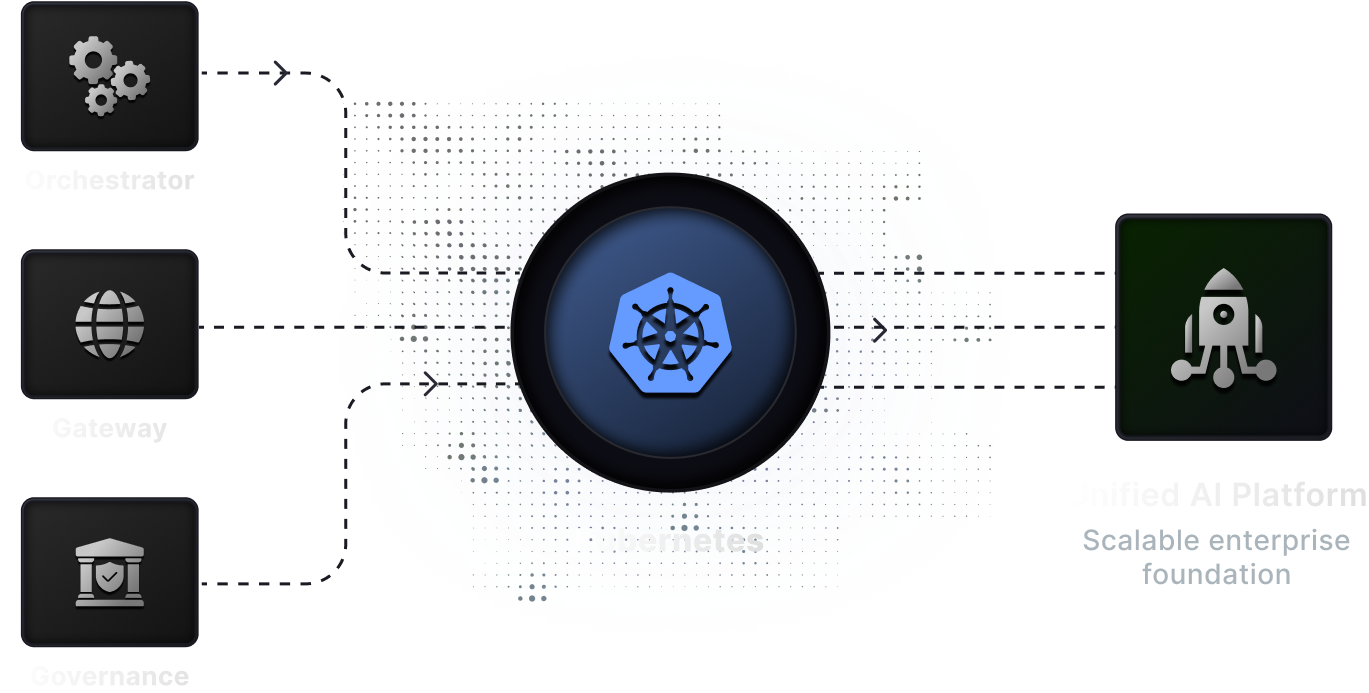

Our managed Kubernetes AI stack brings delivery, inference, and LLM integration together, turning fragmented infrastructure into a unified, governed, and scalable foundation for enterprise AI.

Build smarter models by turning raw data into optimized, trained models through scalable, GPU-accelerated training pipelines.

Deliver real-time predictions at scale with intelligent routing, autoscaling, and cost-aware GPU orchestration.

Turn language models into real business value, powering chatbots, copilots, and AI-driven workflows with enterprise guardrails.

Prepare, transform, and stream the data that fuels AI securely and efficiently, across any cloud or data source.

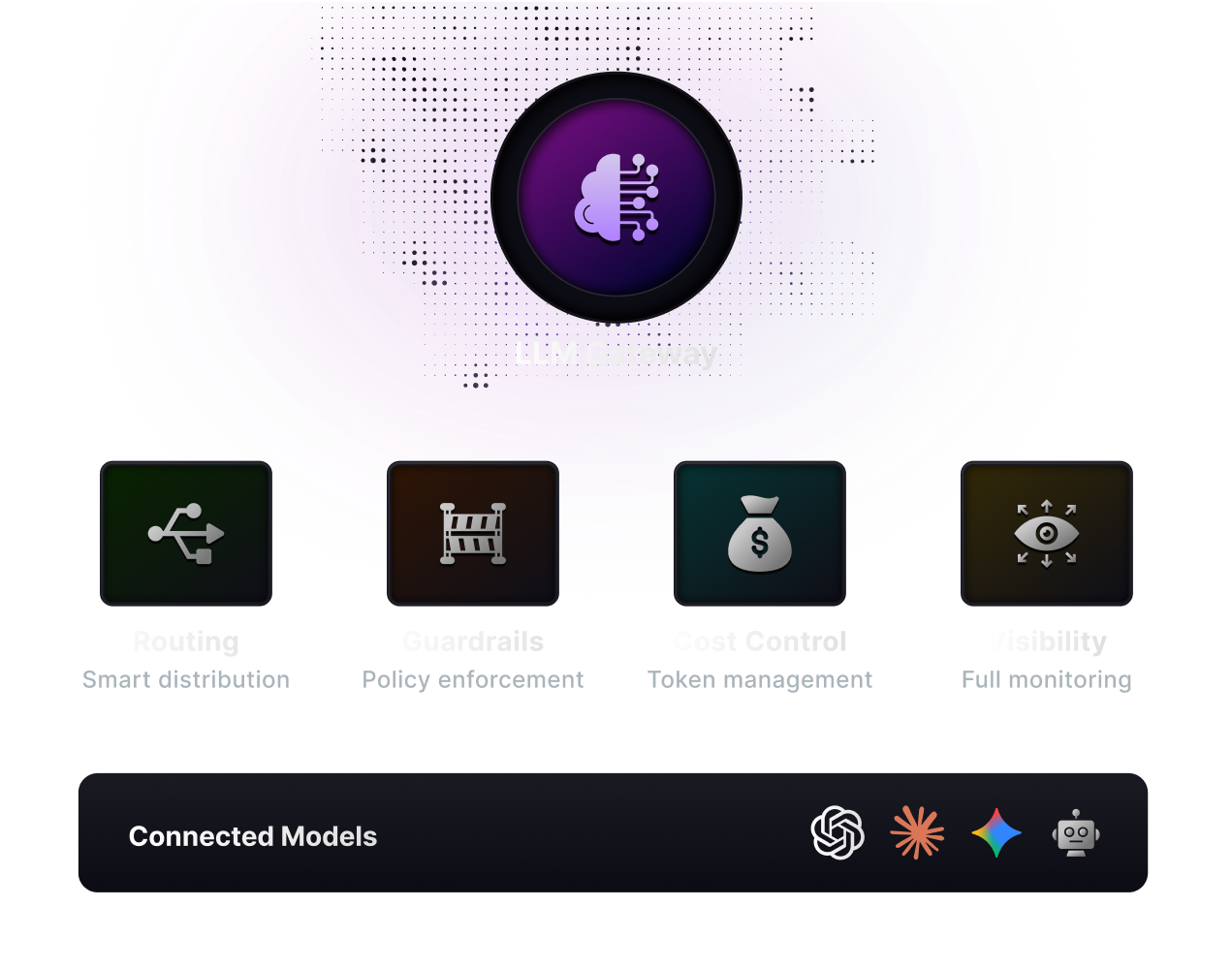

Simplify LLM integration with a gateway that handles routing, policy, and cost control out of the box. Run multiple models, manage token usage, and scale inference without rewriting your stack.